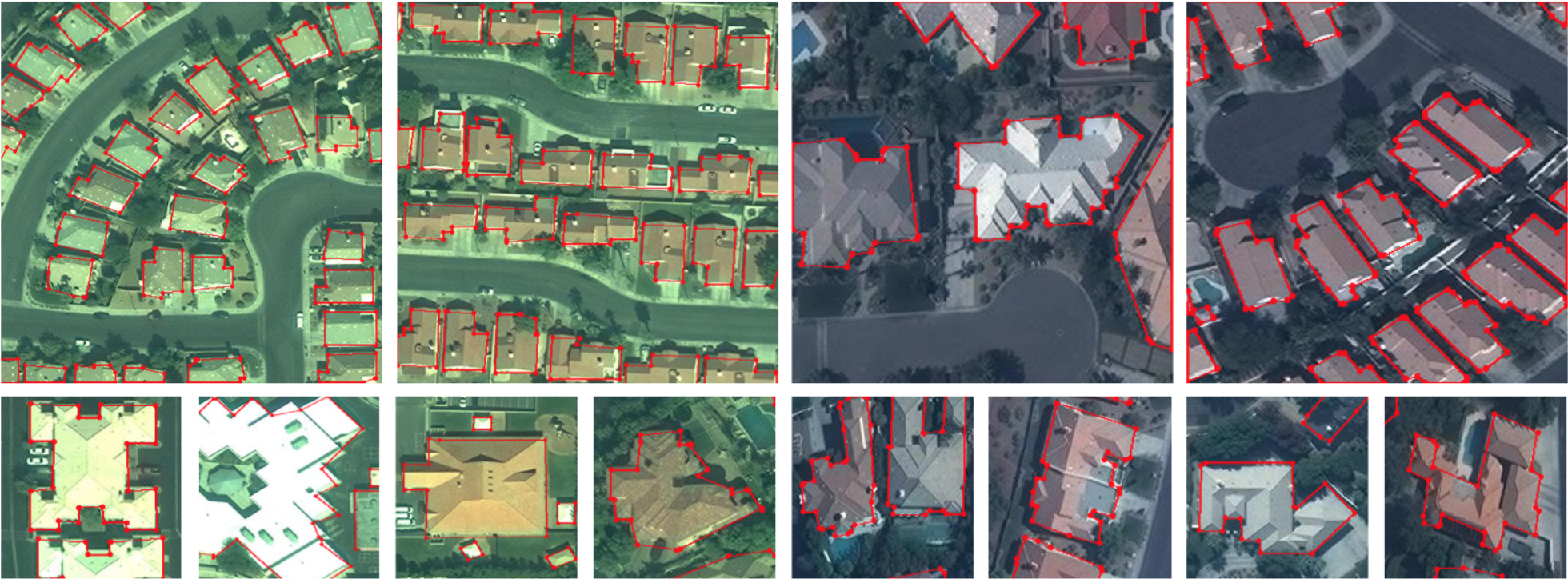

Joint Semantic-Geometric Learning for Polygonal Building Segmentation

Building extraction from aerial or satellite images has been an important research topic in the remote sensing and computer vision domains for decades. Compared with pixel-wise semantic segmentation models that output a raster building segmentation map, polygonal building segmentation approaches produce more realistic building polygons in the vector format that practical applications require. Despite substantial efforts in recent years, state-of-the-art polygonal building segmentation methods still suffer from several limitations, e.g., (1) relying on a perfect segmentation map to guarantee the vectorization quality; (2) requiring a complex post-processing procedure; (3) generating inaccurate vertices with a fixed quantity, a wrong sequential order, self-intersections, etc. To tackle these issues, we propose a polygonal building segmentation approach and make the following contributions: (1) We design a multi-task segmentation network for joint semantic and geometric learning via three tasks, i.e., pixel-wise building segmentation, multi-class corner prediction, and edge orientation prediction. (2) We propose a simple but effective vertex generation module that transforms the segmentation contour into high-quality polygon vertices. (3) We further propose a polygon refinement network that automatically moves the polygon vertices to more accurate locations. Results on two popular building segmentation datasets demonstrate that our approach achieves significant improvements in both building instance segmentation (a 2% F1-score gain) and polygon vertex prediction (a 6% F1-score gain) compared with current state-of-the-art methods.
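To make the raster-to-vector step concrete, the sketch below reduces a dense closed contour to a short list of polygon vertices. It is not the vertex generation module proposed here, which also draws on the predicted corners and edge orientations; it is a generic Ramer-Douglas-Peucker simplification used purely for illustration. The function names (`contour_to_polygon`, `simplify_open`, `point_segment_distance`) and the `tolerance` parameter are hypothetical.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to the segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify_open(points, tolerance):
    """Ramer-Douglas-Peucker on an open polyline; both endpoints are kept."""
    if len(points) < 3:
        return list(points)
    first, last = points[0], points[-1]
    max_dist, max_idx = -1.0, 0
    for i in range(1, len(points) - 1):
        d = point_segment_distance(points[i], first, last)
        if d > max_dist:
            max_dist, max_idx = d, i
    if max_dist <= tolerance:
        return [first, last]
    left = simplify_open(points[: max_idx + 1], tolerance)
    right = simplify_open(points[max_idx:], tolerance)
    return left[:-1] + right  # drop the duplicated split point


def contour_to_polygon(contour, tolerance=1.0):
    """Simplify a closed contour (closing point not repeated) into vertices.

    Assumption: the ring is split at contour[0] and at the point farthest
    from it, so contour[0] is always kept even if it is not a true corner.
    """
    if len(contour) < 4:
        return list(contour)
    start = contour[0]
    far_idx = max(range(len(contour)),
                  key=lambda i: math.dist(contour[i], start))
    first_half = contour[: far_idx + 1]
    second_half = contour[far_idx:] + [start]
    a = simplify_open(first_half, tolerance)
    b = simplify_open(second_half, tolerance)
    return a[:-1] + b[:-1]


if __name__ == "__main__":
    # Dense boundary of a 10 x 10 square, one point per pixel step.
    square = ([(x, 0) for x in range(10)] + [(10, y) for y in range(10)]
              + [(x, 10) for x in range(10, 0, -1)]
              + [(0, y) for y in range(10, 0, -1)])
    print(contour_to_polygon(square, tolerance=0.5))
```

A purely geometric simplification like this depends entirely on the quality of the input contour and on the chosen tolerance, which is the kind of sensitivity that motivates learning corner and orientation cues jointly with the segmentation mask.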